How to Read Files From Excel in Python

Making Sense of Big Information

Practise Y'all Read Excel Files with Python? There is a 1000x Faster Way.

In this article, I'll testify you five ways to load data in Python. Achieving a speedup of 3 orders of magnitude.

![]()

Equally a Python user, I use excel files to load/shop data as concern people similar to share data in excel or csv format. Unfortunately, Python is particularly slow with Excel files.

In this article, I'll show you five ways to load data in Python. In the end, nosotros'll attain a speedup of 3 orders of magnitude. It'll be lightning-fast.

Edit (18/07/2021): I found a way to make the process 5 times faster (resulting in a 5000x speedup). I added it as a bonus at the end of the commodity.

Experimental Setup

Let's imagine that we want to load 10 Exc due east l files with 20000 rows and 25 columns (that'south around 70MB in full). This is a representative instance where you want to load transactional data from an ERP (SAP) to Python to perform some analysis.

Let's populate this dummy information and import the required libraries (we'll hash out pickle and joblib later on in the article).

import pandas equally pd

import numpy as np

from joblib import Parallel, delayed

import time for file_number in range(10):

values = np.random.uniform(size=(20000,25))

pd.DataFrame(values).to_csv(f"Dummy {file_number}.csv")

pd.DataFrame(values).to_excel(f"Dummy {file_number}.xlsx")

pd.DataFrame(values).to_pickle(f"Dummy {file_number}.pickle")

5 Ways to Load Information in Python

Idea #one: Load an Excel File in Python

Let's start with a straightforward way to load these files. Nosotros'll create a first Pandas Dataframe and then append each Excel file to information technology.

start = time.time()

df = pd.read_excel("Dummy 0.xlsx")

for file_number in range(one,10):

df.suspend(pd.read_excel(f"Dummy {file_number}.xlsx"))

finish = time.time()

print("Excel:", end — commencement) >> Excel: 53.iv

It takes around 50 seconds to run. Pretty boring.

Idea #2: Use CSVs rather than Excel Files

Permit's at present imagine that we saved these files as .csv (rather than .xlsx) from our ERP/Organisation/SAP.

start = time.fourth dimension()

df = pd.read_csv("Dummy 0.csv")

for file_number in range(1,10):

df.append(pd.read_csv(f"Dummy {file_number}.csv"))

terminate = time.time()

print("CSV:", terminate — start) >> CSV: 0.632

We can at present load these files in 0.63 seconds. That's about 10 times faster!

Python loads CSV files 100 times faster than Excel files. Use CSVs.

Con: csv files are nearly always bigger than .xlsx files. In this example .csv files are 9.5MB, whereas .xlsx are half-dozen.4MB.

Idea #three: Smarter Pandas DataFrames Creation

We can speed up our process by irresolute the style we create our pandas DataFrames. Instead of appending each file to an existing DataFrame,

- We load each DataFrame independently in a list.

- Then concatenate the whole listing in a single DataFrame.

start = time.time()

df = []

for file_number in range(10):

temp = pd.read_csv(f"Dummy {file_number}.csv")

df.append(temp)

df = pd.concat(df, ignore_index=True)

end = time.time()

impress("CSV2:", stop — start) >> CSV2: 0.619

We reduced the time by a few percent. Based on my experience, this trick volition get useful when you deal with bigger Dataframes (df >> 100MB).

Idea #4: Parallelize CSV Imports with Joblib

We desire to load x files in Python. Instead of loading each file one past one, why not loading them all, at once, in parallel?

We tin practice this easily using joblib.

kickoff = time.time()

def loop(file_number):

return pd.read_csv(f"Dummy {file_number}.csv")

df = Parallel(n_jobs=-1, verbose=10)(delayed(loop)(file_number) for file_number in range(x))

df = pd.concat(df, ignore_index=Truthful)

cease = time.time()

print("CSV//:", end — outset) >> CSV//: 0.386

That'south nearly twice every bit fast as the unmarried core version. However, as a general dominion, practise non look to speed upwardly your processes eightfold by using 8 cores (here, I got x2 speed up by using 8 cores on a Mac Air using the new M1 chip).

Unproblematic Paralellization in Python with Joblib

Joblib is a simple Python library that allows y'all to run a office in //. In practice, joblib works as a list comprehension. Except each iteration is performed past a unlike thread. Here's an example.

def loop(file_number):

return pd.read_csv(f"Dummy {file_number}.csv")

df = Parallel(n_jobs=-1, verbose=10)(delayed(loop)(file_number) for file_number in range(10)) #equivalent to

df = [loop(file_number) for file_number in range(x)]

Thought #five: Utilize Pickle Files

Yous tin can get (much) faster by storing data in pickle files — a specific format used past Python — rather than .csv files.

Con: y'all won't be able to manually open a pickle file and run into what'due south in information technology.

beginning = fourth dimension.time()

def loop(file_number):

return pd.read_pickle(f"Dummy {file_number}.pickle")

df = Parallel(n_jobs=-1, verbose=ten)(delayed(loop)(file_number) for file_number in range(x))

df = pd.concat(df, ignore_index=True)

end = fourth dimension.time()

print("Pickle//:", end — kickoff) >> Pickle//: 0.072

We just cutting the running time by 80%!

In full general, it is much faster to work with pickle files than csv files. Just, on the other hand, pickles files ordinarily accept more infinite on your drive (non in this specific instance).

In exercise, you will not be able to extract data from a system directly in pickle files.

I would advise using pickles in the two following cases:

- You want to relieve information from ane of your Python processes (and yous don't plan on opening it on Excel) to apply it afterward/in another process. Save your Dataframes as pickles instead of .csv

- Yous need to reload the same file(southward) multiple times. The offset time you open a file, salvage information technology as a pickle and so that you lot volition be able to load the pickle version directly adjacent time.

Instance: Imagine that you use transactional monthly information (each month you load a new month of information). Y'all can salve all historical data as .pickle and, each time you receive a new file, you tin can load it once as a .csv and then keep it as a .pickle for the adjacent time.

Bonus: Loading Excel Files in Parallel

Let's imagine that y'all received excel files and that yous have no other choice only to load them every bit is. You tin can also use joblib to parallelize this. Compared to our pickle lawmaking from above, we but need to update the loop function.

start = time.time()

def loop(file_number):

return pd.read_excel(f"Dummy {file_number}.xlsx")

df = Parallel(n_jobs=-1, verbose=x)(delayed(loop)(file_number) for file_number in range(10))

df = pd.concat(df, ignore_index=Truthful)

cease = time.time()

print("Excel//:", stop - get-go) >> 13.45

Nosotros could reduce the loading time by seventy% (from 50 seconds to thirteen seconds).

You can also use this loop to create pickle files on the wing. So that, next time you load these files, you'll be able to achieve lightning fast loading times.

def loop(file_number):

temp = pd.read_excel(f"Dummy {file_number}.xlsx")

temp.to_pickle(f"Dummy {file_number}.pickle")

return temp Recap

By loading pickle files in parallel, we decreased the loading time from 50 seconds to less than a tenth of a second.

- Excel: 50 seconds

- CSV: 0.63 seconds

- Smarter CSV: 0.62 seconds

- CSV in //: 0.34 seconds

- Pickle in //: 0.07 seconds

- Excel in //: xiii.5 seconds

Bonus #ii: 4x Faster Parallelization

Joblib allows to change the parallelization backend to remove some overheads. You can do this by giving prefer="threads" to Parallel.

We obtain a speed of around 0.0096 seconds (over 50 runs with a 2021 MacBook Air).

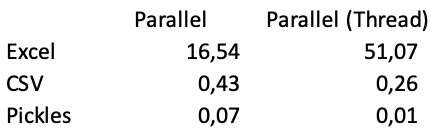

Using prefer="threads" with CSV and Excel parallelization gives the following results.

Every bit you tin see using the "Thread" backend results in a worse score when reading Excel files. Merely to an astonishing performance with pickles (it takes 50 seconds to load Excel files 1 by i, and only 0.01 seconds to load the information reading pickles files in //).

👉 Let's connect on LinkedIn!

About the Author

Nicolas Vandeput is a supply chain data scientist specialized in demand forecasting and inventory optimization. He founded his consultancy company SupChains in 2016 and co-founded SKU Science — a fast, unproblematic, and affordable demand forecasting platform — in 2018. Passionate nigh pedagogy, Nicolas is both an avid learner and enjoys teaching at universities: he has taught forecasting and inventory optimization to master students since 2014 in Brussels, Belgium. Since 2020 he is too educational activity both subjects at CentraleSupelec, Paris, France. He published Data Science for Supply Chain Forecasting in 2018 (2nd edition in 2021) and Inventory Optimization: Models and Simulations in 2020.

haleyafriallifuld.blogspot.com

Source: https://towardsdatascience.com/read-excel-files-with-python-1000x-faster-407d07ad0ed8

0 Response to "How to Read Files From Excel in Python"

Postar um comentário